Exploring Prompt Injection Attacks, NCC Group Research Blog

Por um escritor misterioso

Last updated 21 dezembro 2024

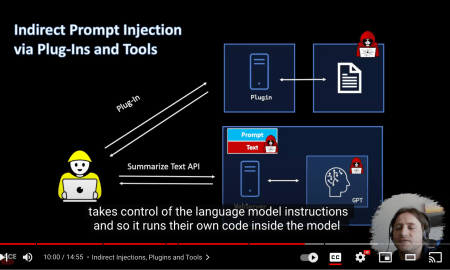

Have you ever heard about Prompt Injection Attacks[1]? Prompt Injection is a new vulnerability that is affecting some AI/ML models and, in particular, certain types of language models using prompt-based learning. This vulnerability was initially reported to OpenAI by Jon Cefalu (May 2022)[2] but it was kept in a responsible disclosure status until it was…

Advanced SQL injection to operating system full control

Farming for Red Teams: Harvesting NetNTLM - MDSec

Reducing The Impact of Prompt Injection Attacks Through Design

Testing a Red Team's Claim of a Successful “Injection Attack” of

Daniel Romero (@daniel_rome) / X

Daniel Romero (@daniel_rome) / X

Black Hills Information Security

👉🏼 Gerald Auger, Ph.D. على LinkedIn: #chatgpt #hackers #defcon

Understanding Prompt Injections and What You Can Do About Them

Recomendado para você

-

Steam Deck Cheat Engine Hack CoSMOS21 dezembro 2024

Steam Deck Cheat Engine Hack CoSMOS21 dezembro 2024 -

How To Download Cheat Engine Without A Virus : r/cheatengine21 dezembro 2024

How To Download Cheat Engine Without A Virus : r/cheatengine21 dezembro 2024 -

Cheat Engine 7.4 SAFE Download : r/cheatengine21 dezembro 2024

Cheat Engine 7.4 SAFE Download : r/cheatengine21 dezembro 2024 -

You can't use Cheat Engine to speed up your game anymore! : r/RotMG21 dezembro 2024

You can't use Cheat Engine to speed up your game anymore! : r/RotMG21 dezembro 2024 -

Reddit Hit by Cyberattack that Allowed Hackers to Steal Source Code21 dezembro 2024

Reddit Hit by Cyberattack that Allowed Hackers to Steal Source Code21 dezembro 2024 -

Pinterest21 dezembro 2024

Pinterest21 dezembro 2024 -

How to Hack: 14 Steps (With Pictures)21 dezembro 2024

How to Hack: 14 Steps (With Pictures)21 dezembro 2024 -

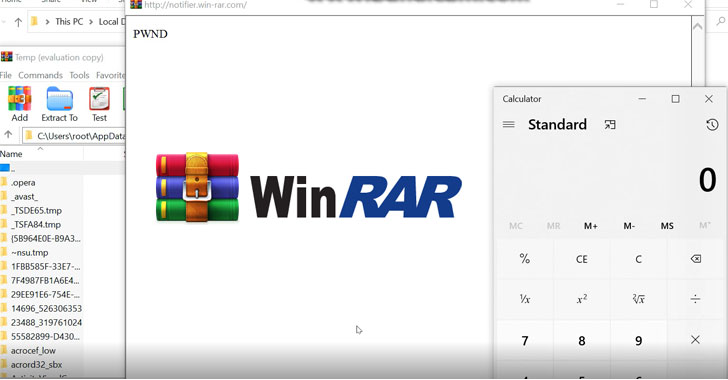

Bug in Popular WinRAR Software Could Let Attackers Hack Your Computer21 dezembro 2024

-

Game Engine! NO ROOT Tweaks Unlock 60-120FPS🔥⚡21 dezembro 2024

Game Engine! NO ROOT Tweaks Unlock 60-120FPS🔥⚡21 dezembro 2024 -

Web Accessibility Monitoring Tools Roundup • DigitalA11Y21 dezembro 2024

Web Accessibility Monitoring Tools Roundup • DigitalA11Y21 dezembro 2024

você pode gostar

-

Samsung Galaxy S21 Ultra 5G SM-G998U1 256 GB 12 GB RAM21 dezembro 2024

Samsung Galaxy S21 Ultra 5G SM-G998U1 256 GB 12 GB RAM21 dezembro 2024 -

ROBLOX FREE ROBUX HACK NO HUMAN VERIFICATION 18 December 202321 dezembro 2024

ROBLOX FREE ROBUX HACK NO HUMAN VERIFICATION 18 December 202321 dezembro 2024 -

MultStore 2.0 - No topo da moda, Temas para E-commerce21 dezembro 2024

MultStore 2.0 - No topo da moda, Temas para E-commerce21 dezembro 2024 -

Playeasy.com21 dezembro 2024

-

Um close de um adesivo de um pequeno pokémon azul e branco generativo ai21 dezembro 2024

Um close de um adesivo de um pequeno pokémon azul e branco generativo ai21 dezembro 2024 -

:no_upscale()/cdn.vox-cdn.com/uploads/chorus_asset/file/24599140/polygon_loz_ocarina.jpg) Zelda: Ocarina of Time's songs are everywhere in modern music21 dezembro 2024

Zelda: Ocarina of Time's songs are everywhere in modern music21 dezembro 2024 -

UPDATE 4☀️⚫] King Legacy Code: HYDRAGLYPHICS Showcase Awaken Light #l21 dezembro 2024

-

OPTC Guideblog: Vice Admirals Bustercall Raid21 dezembro 2024

OPTC Guideblog: Vice Admirals Bustercall Raid21 dezembro 2024 -

Godzilla earth tree, Godzilla21 dezembro 2024

Godzilla earth tree, Godzilla21 dezembro 2024 -

Sonic the Hedgehog - SEGA Online Emulator21 dezembro 2024

Sonic the Hedgehog - SEGA Online Emulator21 dezembro 2024

![UPDATE 4☀️⚫] King Legacy Code: HYDRAGLYPHICS Showcase Awaken Light #l](https://www.tiktok.com/api/img/?itemId=7162397147118406958&location=0&aid=1988)